Behind the Scenes of Our Terraform-Powered Deployments

At CLOUDETEER, Terraform is our go-to tool for Infrastructure as Code (IaC). In this post, we share how we’ve built a secure, standardized, and scalable deployment pipeline for Azure – avoiding static secrets and focusing on transparency, reuse, and best practices.

We offer an in-depth look into our solution – complete with code placeholders so you can replicate or adapt the components for your own use case.

Above: Architecture diagram illustrating the overall solution.

Starting Point: Fragmented Setups and Security Gaps

Legacy Patterns and Their Drawbacks

In earlier projects, our deployment setups were often tailored to individual clients. Some used GitHub public runners, others had manually configured private runners. While Terraform state was stored consistently in Azure Blob Storage, authentication mechanisms varied and often relied on static credentials – lacking proper RBAC and introducing security and maintenance risks.

A key issue was the ability to run Terraform locally. Developers sometimes made direct changes to the production state from their workstations – possibly using unversioned code. This reduced traceability, caused drift, and violated core security principles.

Many of these setups relied on Azure Service Principals with ARM_CLIENT_ID and ARM_CLIENT_SECRET. These secrets had to be managed manually, could expire or be compromised, and contradicted Zero Trust principles.

There was also no unified solution for the initial provisioning of infrastructure for new customers. Each environment was essentially its own isolated setup.

Our Requirements

We defined a target architecture with the following principles:

- No static credentials – no secrets in code or CI/CD workflows

- Deployments only via GitHub Actions – no local apply

- Private Azure Blob Storage for Terraform state – with RBAC, encryption, and network restrictions

- GitHub self-hosted runners – full control over runtime and networking

- Central Terraform modules – to establish standards, reduce maintenance, and simplify onboarding

Implementation Details

All example code shown below has been tested with the azurerm provider version 4.26.0. While it is likely to work with newer versions, breaking changes cannot be ruled out.

terraform {

required_providers {

azurerm = {

source = "hashicorp/azurerm"

version = "4.26.0"

}

}

}

Next, configure the Terraform provider itself. Make sure to replace the placeholder values for subscription_id and tenant_id with the correct values for your environment.

We enable storage_use_azuread because the storage account below sets shared_access_key_enabled = false. This enforces access via Microsoft Entra ID (formerly Azure AD).

provider "azurerm" {

subscription_id = "00000000-0000-0000-0000-000000000000"

tenant_id = "00000000-0000-0000-0000-000000000000"

storage_use_azuread = true

features {}

}

Before diving into the individual components, we define a set of foundational resources. These include a Resource Group, a Virtual Network, and a Subnet – all of which are referenced in multiple code examples throughout this article.

resource "azurerm_resource_group" "this" {

location = "germanywestcentral"

name = "rg-launchpad"

}

resource "azurerm_virtual_network" "this" {

name = "vnet-launchpad"

location = azurerm_resource_group.this.location

resource_group_name = azurerm_resource_group.this.name

address_space = ["192.168.42.0/27"]

}

resource "azurerm_subnet" "this" {

name = "snet-launchpad"

resource_group_name = azurerm_resource_group.this.name

virtual_network_name = azurerm_virtual_network.this.name

address_prefixes = ["192.168.42.0/28"]

}

1. GitHub Actions + Azure Workload Identity Federation

A cornerstone of our setup is authenticating GitHub Actions workflows using Azure Workload Identity Federation. Instead of storing static secrets, our workflows authenticate directly via OpenID Connect (OIDC).

Benefits

- No need for secret management

- Federation is limited to specific environments (e.g. prod-azure, prod-azure-plan)

- Full integration with Azure RBAC

- Audit logging, Conditional Access, and traceability

Terraform Example: Managed Identity + Federated Credentials

The following snippet shows how to define a managed identity and configure federated credentials to allow GitHub Actions to authenticate securely with Azure – without using secrets.

variable "runner_github_repo" {

type = string

}

resource "azurerm_user_assigned_identity" "this" {

name = "id-launchpad"

location = azurerm_resource_group.this.location

resource_group_name = azurerm_resource_group.this.name

}

resource "azurerm_federated_identity_credential" "this" {

for_each = toset(["prod-azure", "prod-azure-plan"])

name = each.key

audience = ["api://AzureADTokenExchange"]

issuer = "https://token.actions.githubusercontent.com"

parent_id = azurerm_user_assigned_identity.this.id

resource_group_name = azurerm_user_assigned_identity.this.resource_group_name

subject = "repo:cloudeteer/${var.runner_github_repo}:environment:${each.key}"

}
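A workflow that signs in through OIDC typically needs the client ID and tenant ID of the identity, and the subject configured in Azure has to match the claim GitHub issues for each environment. As a minimal sketch, the outputs below expose these values for inspection. The output names are illustrative assumptions and are not part of the original example.

```hcl
# Sketch only: output names are hypothetical.

# Client ID of the user-assigned identity, used by the workflow to sign in.
output "azure_client_id" {
  value = azurerm_user_assigned_identity.this.client_id
}

# Tenant ID the identity belongs to.
output "azure_tenant_id" {
  value = azurerm_user_assigned_identity.this.tenant_id
}

# Map of GitHub environment name to the federated subject configured for it,
# e.g. "repo:cloudeteer/<repo>:environment:prod-azure".
output "federated_subjects" {
  value = {
    for env, cred in azurerm_federated_identity_credential.this : env => cred.subject
  }
}
```

Comparing the federated_subjects output with the repository and environment names used in GitHub is a quick way to spot a mismatch before a workflow run fails at login.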

To enable this identity to manage Azure resources, appropriate Azure IAM permissions must be assigned. By default, we assign the built-in Owner role at a management group level, granting full administrative access across all subscriptions within the group.

ℹ️ NOTE – You need unrestricted Owner permissions yourself in order to assign the Owner role.

variable "management_group_name" {

type = string

}

resource "azurerm_role_assignment" "management_group_owner" {

principal_id = azurerm_user_assigned_identity.this.principal_id

role_definition_name = "Owner"

scope = "/providers/Microsoft.Management/managementGroups/${var.management_group_name}"

}

Alternatively, permissions can be scoped more narrowly to a specific subscription or resource group, depending on the principle of least privilege and the actual requirements of the workload.

# variable "subscription_id" {

# type = string

# }

# resource "azurerm_role_assignment" "subscription_owner" {

# principal_id = azurerm_user_assigned_identity.this.principal_id

# role_definition_name = "Owner"

# scope = "/subscriptions/${var.subscription_id}"

# }

# resource "azurerm_role_assignment" "resource_group_owner" {

# principal_id = azurerm_user_assigned_identity.this.principal_id

# role_definition_name = "Owner"

# scope = azurerm_resource_group.this.id

# }

💡 TIP – In some cases, assigning the built-in Contributor role may be more appropriate, as it provides the ability to manage resources without granting access to role assignments or access policies.
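As a rough sketch of the tip above, the assignment below grants Contributor on the subscription the provider is configured for. The resource and data source labels are illustrative assumptions.

```hcl
# Sketch only: Contributor instead of Owner, scoped to the current subscription.
data "azurerm_subscription" "current" {}

resource "azurerm_role_assignment" "subscription_contributor" {
  principal_id         = azurerm_user_assigned_identity.this.principal_id
  role_definition_name = "Contributor"

  # The data source ID already has the form "/subscriptions/<guid>".
  scope = data.azurerm_subscription.current.id
}
```

Keep in mind that Contributor cannot create role assignments. Configurations that manage role assignments themselves, as the examples in this article do, would still need a role that permits it.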

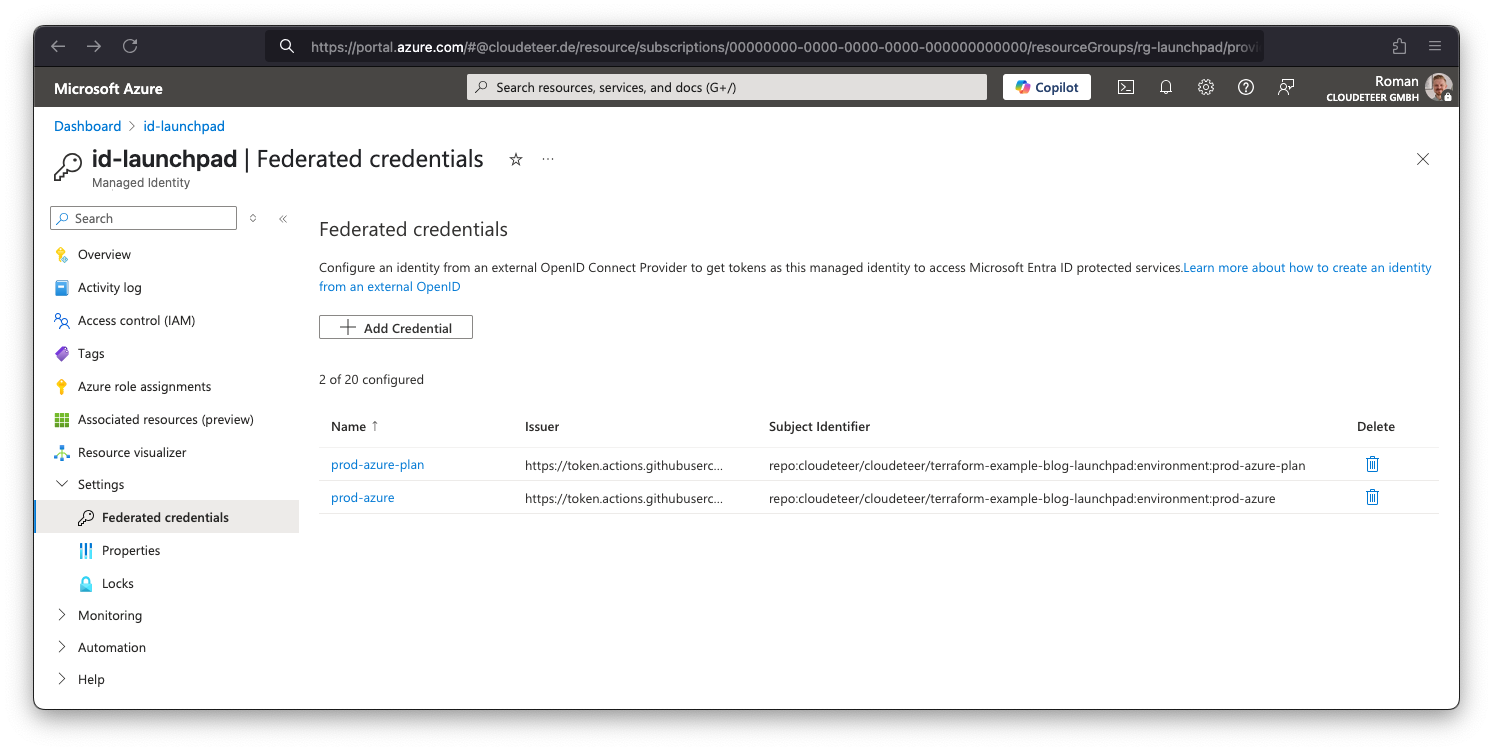

Screenshots: Federated Credentials + GitHub Setup

The screenshot below showcases the Federated Credentials configuration in the Azure Portal, as provisioned by the Terraform code above. These credentials enable GitHub Actions to authenticate securely via OpenID Connect (OIDC) without relying on static secrets. The setup enforces least-privilege access by restricting the binding to specific repositories, environments, and subject claims, ensuring both security and traceability.

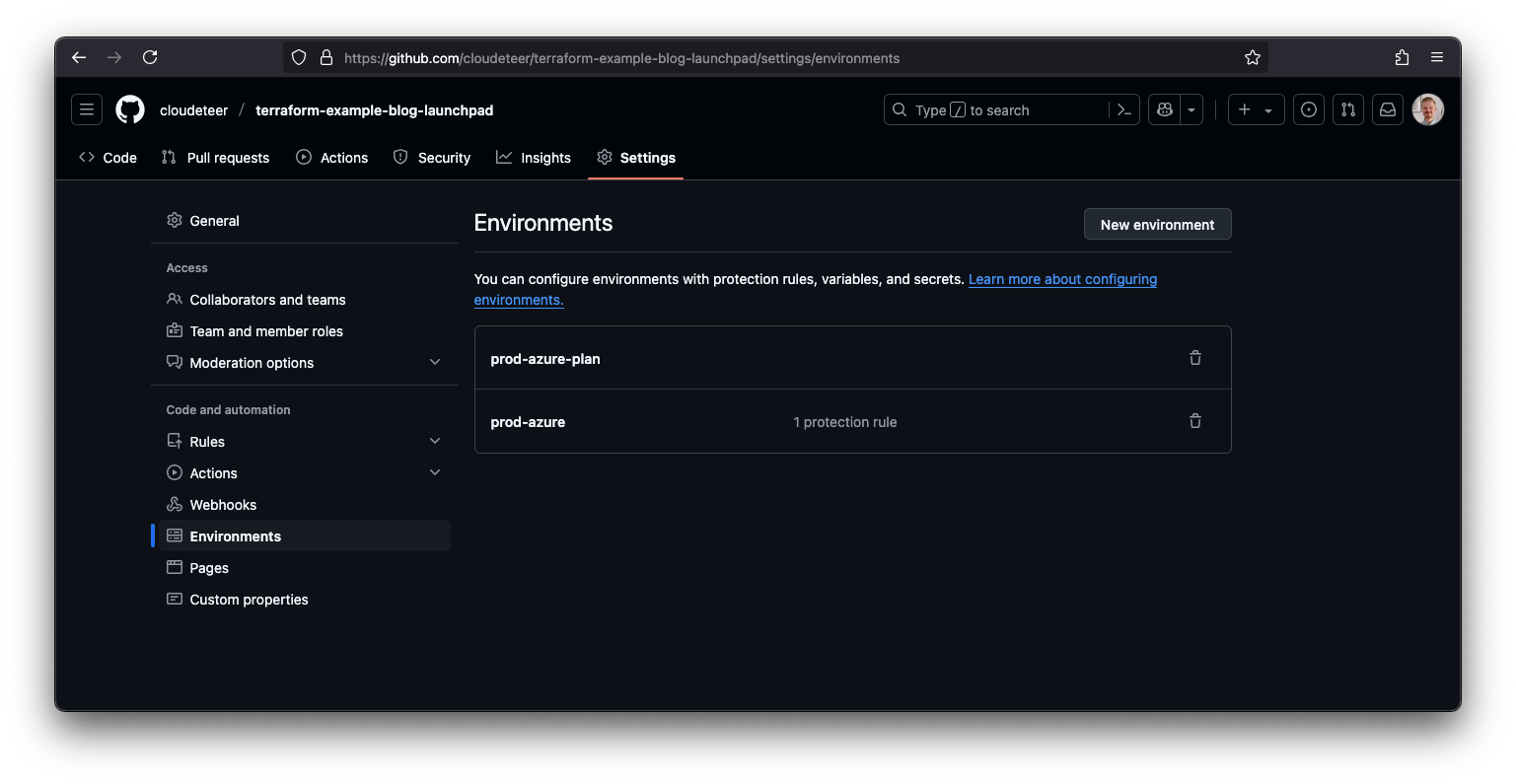

The second screenshot shows the configuration of GitHub Environments. We use separate environments like prod-azure and prod-azure-plan to logically isolate different stages of the deployment process. These environments are referenced in the workflows and mapped to corresponding identity bindings in Azure.

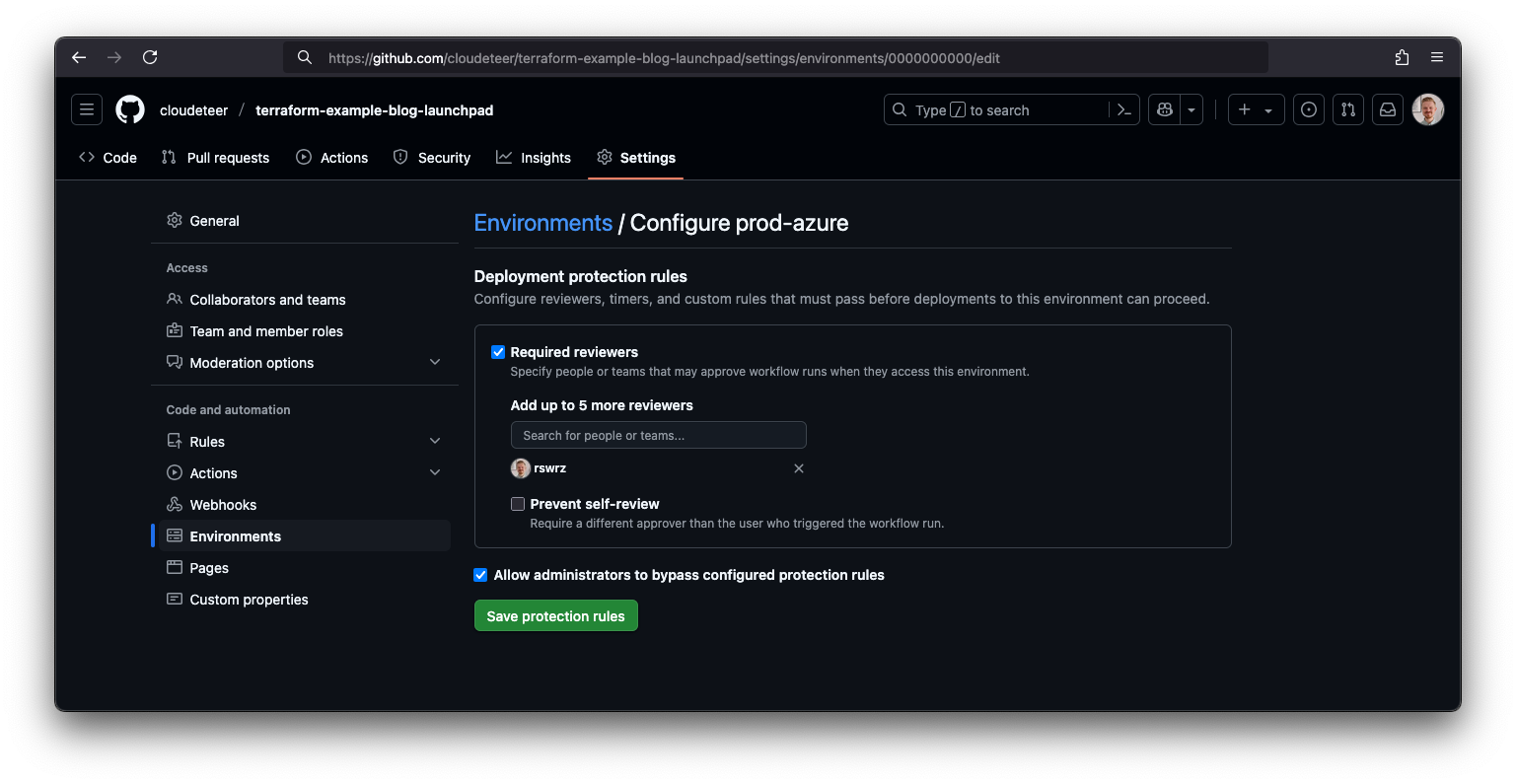

The third screenshot demonstrates how we use deployment protection rules to enforce manual approvals or additional checks before applying infrastructure changes. This helps ensure that all changes go through a controlled release process with built-in guardrails.

With this setup, we’ve already met two of our key goals:

- No static credentials – no secrets in code or CI/CD workflows ✔️

- Deployments only via GitHub Actions – no local apply ✔️

2. Secure Terraform State on Azure Blob Storage

Storing Terraform state securely is a top priority. Terraform state files may include sensitive information such as resource identifiers, keys, or metadata. To ensure confidentiality and integrity, we opted for Azure Blob Storage with strict access policies.

We decided to use Private Endpoints combined with role-based access to fully isolate state access from the public internet. The use of Managed Identity eliminates the need for access keys. In addition, we leverage storage features like versioning and soft delete to add resilience against data loss or unintended changes.

Key Measures

- Private Endpoint (no public access)

- Access only via Managed Identity (no shared keys)

- TLS 1.2 and server-side encryption

- Versioning, soft delete, and resource locks

- Geo-replication for high availability

Terraform Example: Blob Storage

Here’s how we provision a secure storage account with a private endpoint, define a role assignment for the managed identity, and configure state-related settings such as blob versioning and a delete lock.

resource "random_string" "this" {

length = 3

special = false

upper = false

}

resource "azurerm_storage_account" "this" {

name = "stlaunchpad${random_string.this.result}"

location = azurerm_resource_group.this.location

resource_group_name = azurerm_resource_group.this.name

account_tier = "Standard"

account_replication_type = "RAGRS"

allow_nested_items_to_be_public = false

default_to_oauth_authentication = true

infrastructure_encryption_enabled = true

public_network_access_enabled = false

shared_access_key_enabled = false

blob_properties {

versioning_enabled = true

}

}

resource "azurerm_storage_container" "this" {

name = "tfstate"

storage_account_id = azurerm_storage_account.this.id

container_access_type = "private"

}

resource "azurerm_private_endpoint" "this" {

name = "pe-stlaunchpad"

location = azurerm_resource_group.this.location

resource_group_name = azurerm_resource_group.this.name

subnet_id = azurerm_subnet.this.id

private_service_connection {

name = "blob"

is_manual_connection = false

private_connection_resource_id = azurerm_storage_account.this.id

subresource_names = ["blob"]

}

}

resource "azurerm_role_assignment" "this" {

principal_id = azurerm_user_assigned_identity.this.principal_id

scope = azurerm_storage_account.this.id

role_definition_name = "Storage Blob Data Owner"

}

resource "azurerm_management_lock" "this" {

name = "storage-account-lock"

lock_level = "CanNotDelete"

scope = azurerm_storage_account.this.id

}
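The key measures list soft delete, which the storage account example does not configure explicitly. As a hedged sketch, soft delete could be enabled by extending the blob_properties block of the storage account as follows. The retention periods are assumed values, not taken from the original setup.

```hcl
# Fragment only: would replace the blob_properties block inside
# azurerm_storage_account.this. Retention periods are assumptions.
blob_properties {
  versioning_enabled = true

  # Soft delete for blobs, i.e. the state files themselves.
  delete_retention_policy {
    days = 30
  }

  # Soft delete for the container that holds the state files.
  container_delete_retention_policy {
    days = 30
  }
}
```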

With private networking, identity-based access, and versioning in place, the state storage no longer depends on shared keys or public endpoints. This marks another milestone toward our key objectives.

- Private Azure Blob Storage for Terraform state – with RBAC, encryption, and network restrictions ✔️

3. Self-hosted GitHub Runners on Azure VMSS

To access internal resources through private endpoints, our GitHub Actions workflows must run inside our virtual network. We use Azure Virtual Machine Scale Sets (VMSS) to host self-managed GitHub runners within a secure and controlled environment.

This setup ensures network-level isolation and allows us to tightly control updates, scaling, and operational policies. By avoiding GitHub-hosted runners, we eliminate the need to open public network access to internal resources.

By default, each virtual machine instance hosts five GitHub Actions runner processes, so a single instance can handle five jobs in parallel. In our testing, five processes per instance gave the best balance of performance and cost for the Standard_D2plds_v5 machine size.

Features

- Full control over OS, network, and runtime

- Private communication with Azure services

- Auto-scaling and self-healing

- Supports rolling updates

Terraform Example: Azure VMSS

This example demonstrates how to define a Virtual Machine Scale Set (VMSS) in Terraform to host GitHub self-hosted runners, including networking, identity, and scaling configurations.

Instead of leveraging an Azure Private DNS Zone for resolving the Storage Account's Private Endpoint IP address, we adopt a simplified approach by passing the hostname and private IP address directly to the install_github_actions_runner.sh.tftpl template. When rendered, this script appends a static entry to the /etc/hosts file. This method minimizes complexity and reduces the overall infrastructure footprint.
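For comparison, the Private DNS Zone alternative that we chose not to use could look roughly like the sketch below. It is not part of the setup described in this article, and the resource labels and link name are illustrative assumptions.

```hcl
# Sketch only: the alternative to the /etc/hosts entry, not used in this setup.
resource "azurerm_private_dns_zone" "blob" {
  name                = "privatelink.blob.core.windows.net"
  resource_group_name = azurerm_resource_group.this.name
}

# Makes the zone resolvable from inside the Launchpad virtual network.
resource "azurerm_private_dns_zone_virtual_network_link" "blob" {
  name                  = "link-vnet-launchpad" # hypothetical name
  resource_group_name   = azurerm_resource_group.this.name
  private_dns_zone_name = azurerm_private_dns_zone.blob.name
  virtual_network_id    = azurerm_virtual_network.this.id
}

# The existing azurerm_private_endpoint.this would additionally need:
#
#   private_dns_zone_group {
#     name                 = "default"
#     private_dns_zone_ids = [azurerm_private_dns_zone.blob.id]
#   }
```

This adds two resources and a nested block for a single hostname, which is the overhead the static hosts entry avoids.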

To register the runner with GitHub, the runner_github_pat variable is required. This GitHub personal access token (PAT) must have the following repository-level permissions:

- Actions: read

- Administration: write (see Security Considerations below for details)

variable "runner_github_pat" {

type = string

sensitive = true

}

locals {

github_runner_script = base64gzip(

templatefile(

"${path.module}/install_github_actions_runner.sh.tftpl",

{

private_endpoint_storage_account_ip = one(azurerm_private_endpoint.this.private_service_connection[*].private_ip_address)

storage_account_hostname = azurerm_storage_account.this.primary_blob_host

runner_arch = "arm64"

runner_count = "5"

runner_user = "actions-runner"

runner_version = "latest"

runner_github_pat = var.runner_github_pat

runner_github_repo = var.runner_github_repo

}

)

)

}

resource "random_password" "this" {

length = 30

}

resource "azurerm_linux_virtual_machine_scale_set" "this" {

name = "vmss-launchpad"

location = azurerm_resource_group.this.location

resource_group_name = azurerm_resource_group.this.name

#

# Virtual Machine Authentication

#

admin_password = random_password.this.result

admin_username = "azureadmin"

disable_password_authentication = false

#

# Virtual Machine Instance Parameter

#

instances = 1

overprovision = false

sku = "Standard_D2plds_v5"

source_image_reference {

publisher = "Canonical"

offer = "ubuntu-24_04-lts"

sku = "server-arm64"

version = "latest"

}

network_interface {

name = "primary"

primary = true

ip_configuration {

name = "primary"

primary = true

subnet_id = azurerm_subnet.this.id

}

}

os_disk {

storage_account_type = "Standard_LRS"

caching = "ReadOnly"

disk_size_gb = 32

diff_disk_settings {

option = "Local"

placement = "CacheDisk"

}

}

#

# Instance Upgrade

#

upgrade_mode = "Automatic"

automatic_os_upgrade_policy {

disable_automatic_rollback = false

enable_automatic_os_upgrade = false

}

rolling_upgrade_policy {

max_batch_instance_percent = 100

max_unhealthy_instance_percent = 100

max_unhealthy_upgraded_instance_percent = 100

pause_time_between_batches = "PT0M"

}

#

# Runner Install Script

#

custom_data = base64encode("#cloud-config\n#${sha256(local.github_runner_script)}")

extensions_time_budget = "PT15M"

extension {

name = "github-actions-runner"

publisher = "Microsoft.Azure.Extensions"

type = "CustomScript"

type_handler_version = "2.0"

settings = jsonencode({

"skipDos2Unix" : true

})

protected_settings = jsonencode({

"script" = local.github_runner_script

})

}

#

# Automatic Instance Repair

#

automatic_instance_repair {

enabled = true

grace_period = "PT10M"

}

extension {

name = "health"

publisher = "Microsoft.ManagedServices"

type = "ApplicationHealthLinux"

automatic_upgrade_enabled = true

type_handler_version = "1.0"

settings = jsonencode({

protocol = "tcp"

port = 22

})

}

}

Self-hosted runners on Azure VMSS provide secure, isolated environments for our CI/CD workflows, ensuring full control over network access and runtime – requirement fulfilled:

- GitHub self-hosted runners – full control over runtime and networking ✔️

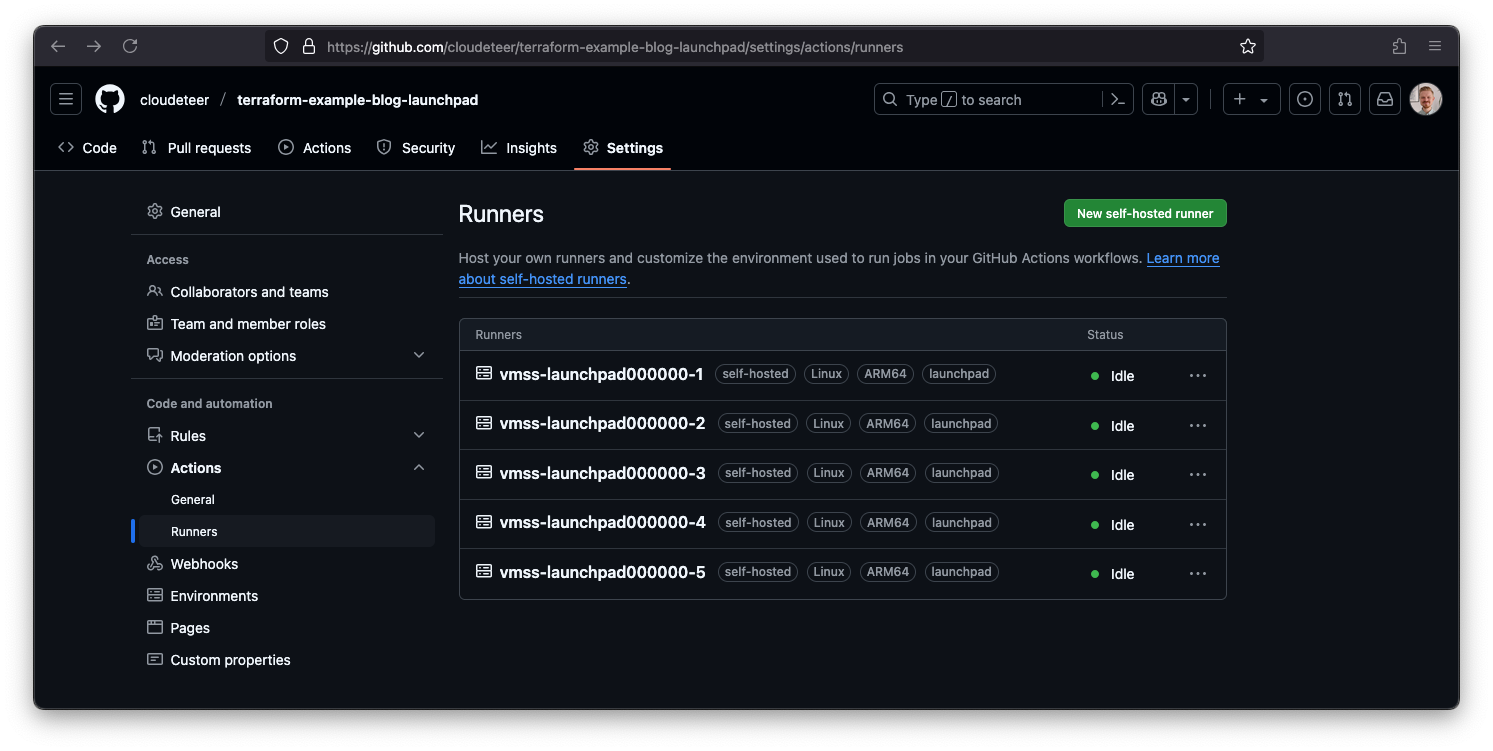

Once the runner is deployed and the GitHub PAT is used correctly, it will automatically register with the repository:

Installation Script

The runner setup leverages a templated shell script, install_github_actions_runner.sh.tftpl, executed via Azure's Custom Script Extension. This approach ensures sensitive information remains secure while enabling dynamic configuration during provisioning through Terraform Template Strings. The script automates the installation of dependencies, configures the GitHub Actions Runner service, and registers the virtual machine as a self-hosted runner in the specified GitHub repository.

ℹ️ NOTE – The script is executed using the Azure Custom Script Extension to ensure that the sensitive GitHub PAT is not embedded directly in the cloud-init shell scripts on the virtual machine, thereby enhancing security and reducing exposure risks.

⚠️ CAUTION – The Terraform template language uses the ${example} syntax for variables. Therefore, Bash parameter substitution in this template must be written with a double dollar sign ($$), which Terraform renders as a single dollar sign ($). For example, $${RUNNER_VERSION#v} is rendered as ${RUNNER_VERSION#v}.
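The escaping rule can be tried out in isolation. The sketch below uses ordinary quoted strings, which follow the same template syntax as .tftpl files. The local names and the version value are hypothetical.

```hcl
locals {
  runner_version = "v2.300.0" # hypothetical value for illustration

  # Single dollar sign: Terraform substitutes the value at render time.
  interpolated = "RUNNER_VERSION=${local.runner_version}"

  # Double dollar sign: Terraform emits a literal ${...} and leaves the
  # parameter substitution to Bash.
  escaped = "echo $${RUNNER_VERSION#v}"
}

output "interpolated" {
  value = local.interpolated # RUNNER_VERSION=v2.300.0
}

output "escaped" {
  value = local.escaped # echo ${RUNNER_VERSION#v}
}
```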

Security Considerations and Future Improvements

Currently, generating a GitHub Actions registration token requires a GitHub PAT with repository administration privileges. While this introduces a security concern due to the elevated privileges of the token, there is no lower-privilege alternative available for this process at present. We are actively exploring solutions to eliminate the reliance on high-privilege static tokens in the future.

4. Central Terraform Module: CLOUDETEER Launchpad

To reduce duplication and simplify onboarding, we’ve encapsulated the above components into a reusable Terraform module. Our CLOUDETEER Launchpad 🚀 module provides a ready-to-use baseline for Azure infrastructure provisioning.

The module is available here:

- GitHub: cloudeteer/terraform-azurerm-launchpad

- Terraform Registry: cloudeteer/launchpad

- OpenTofu Registry: cloudeteer/launchpad

This module ensures consistent architecture across environments, supports secure defaults, and allows new projects to get started within minutes. Changes can be versioned and rolled out incrementally across tenants.

Goals

- Unified onboarding for new customers

- Reusability and maintainability

- Automatable upgrade path

- Enforcing top security standards through consistent maintenance workflows

Terraform Example: Terraform Module

This example demonstrates how to deploy the Launchpad module in a default configuration. For a comprehensive overview of available configuration options, refer to the module's official documentation.

The variables runner_github_pat and runner_github_repo should be dynamically provided at runtime during deployment. This can be achieved by setting the environment variables TF_VAR_runner_github_pat and TF_VAR_runner_github_repo prior to execution.

variable "runner_github_pat" {

type = string

sensitive = true

}

variable "runner_github_repo" {

type = string

}

resource "azurerm_resource_group" "launchpad" {

location = "germanywestcentral"

name = "rg-launchpad"

}

module "launchpad" {

source = "cloudeteer/launchpad/azurerm"

version = "0.8.0"

resource_group_name = azurerm_resource_group.launchpad.name

location = azurerm_resource_group.launchpad.location

runner_github_pat = var.runner_github_pat

runner_github_repo = var.runner_github_repo

virtual_network_address_space = ["192.168.42.0/27"]

subnet_address_prefixes = ["192.168.42.0/28"]

}

By providing and using our CLOUDETEER Launchpad, we’ve fulfilled the final requirement:

- Central Terraform modules – to establish standards, reduce maintenance, and simplify onboarding ✔️

Initial Launchpad Setup

We address the chicken-and-egg problem (the pipeline cannot deploy the very infrastructure it runs on) by performing the initial deployment of the Launchpad module from a developer's local machine. Once the foundational infrastructure is in place, we transition the process to a GitHub Actions workflow for ongoing management. The high-level steps involved are:

- Run the initial deployment locally.

- Set up the GitHub repository for CI/CD integration.

- Migrate the Terraform state to the remote backend.

- Commit the configuration and rely on GitHub Actions for all subsequent updates.
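The state migration step could be sketched as adding a backend block like the one below and then running terraform init -migrate-state. The storage account name and state key are placeholders, and the authentication details of the actual onboarding process may differ.

```hcl
# Sketch only: placeholder values, not the actual onboarding configuration.
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-launchpad"
    storage_account_name = "stlaunchpadxyz"    # placeholder: the real name carries a random suffix
    container_name       = "tfstate"
    key                  = "launchpad.tfstate" # hypothetical state file name

    # Needed because shared access keys are disabled on the storage account.
    use_azuread_auth = true
  }
}
```

In a GitHub Actions workflow, OIDC authentication for the backend can be switched on with the ARM_USE_OIDC environment variable instead of hard-coding it in the block.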

We’ll cover this onboarding process in a separate post. 👀

Conclusion

In this article, we’ve walked through the architecture and best practices behind our secure Azure deployment pipeline. The Launchpad – responsible for handling all future Azure deployments – serves as the foundational infrastructure for our customers’ environments, ensuring security, scalability, and consistency.

Key highlights of our approach:

- Launchpad module as the base for all Azure deployments

- Secure authentication with Azure Workload Identity Federation

- Secure storage for Terraform state on Azure Blob Storage

- Use of GitHub self-hosted runners for controlled, network-isolated execution

By leveraging the Launchpad Terraform module, new customers can quickly adopt secure, reproducible infrastructure deployments, while ensuring the integrity of all future deployments.

Contact & Discussion

We hope this breakdown gives you valuable insights into how we approach secure infrastructure deployments using Terraform, Azure, and GitHub Actions. The Launchpad module is not just a solution for our needs – it’s an open contribution to the community, allowing you to tailor and refine the approach for your own use cases.

We’d love to hear your feedback – visit our Contact page to explore the available ways to reach us.

Full Code Available on GitHub

The complete code for all examples and configurations discussed in this article is available in our GitHub repository: cloudeteer/terraform-example-blog-launchpad. You can explore the entire setup, including the Terraform scripts and configuration files.

Feel free to clone, modify, and contribute to the repository as needed.